PromptAI (Alpha)

PromptAI is Conviva's generative AI-powered tool for conversational learning. Use it to explore, learn about, and get more from the Operational Data Platform.

Type your prompt in the question area or click one of the common questions to get started.

PromptAI responds with the relevant answer and any related data, such as metric values and time series widgets for the specified period.

Note: Conviva PromptAI is currently in limited Alpha deployment. Please reach out to your Conviva Account Representative for questions about PromptAI features and Alpha participation.

Note: This product incorporates Meta Llama 3, which is licensed under the Meta Llama 3 Community License found at: https://llama.meta.com/llama3/license/.

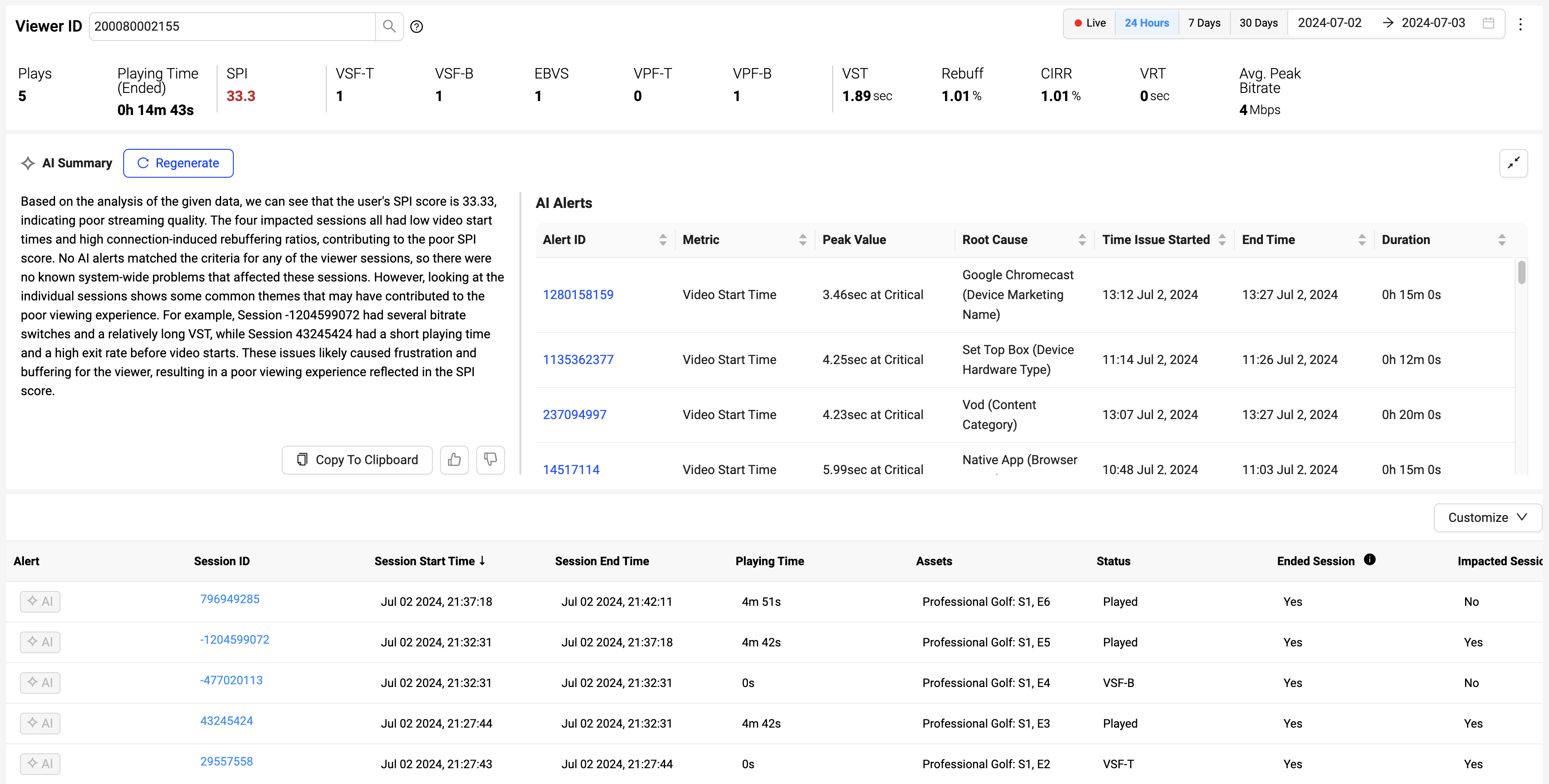

Viewer Module Summary for VSI

The AI Summary feature on the Viewer Module generates a comprehensive summary of the viewer experience, providing a deeper understanding of the video viewer data. Because the summary goes beyond tabular metrics and metadata, it can be used cross-functionally across the organization.

Conviva employs intelligent algorithms to generate AI Alerts. When generating the Viewer Module (VM) AI Summary, Conviva also uses AI to explain sessions that may have been part of an AI Alert cohort based on specific time frames, root causes, and metrics.

To further analyze how a session correlates with an AI Alert, hover over or click the AI Alert indicator (shown for sessions correlated to an alert) in the session table. Conviva's explainable AI system then lets you review the alert's behavior and access detailed diagnostic information for confirmation.

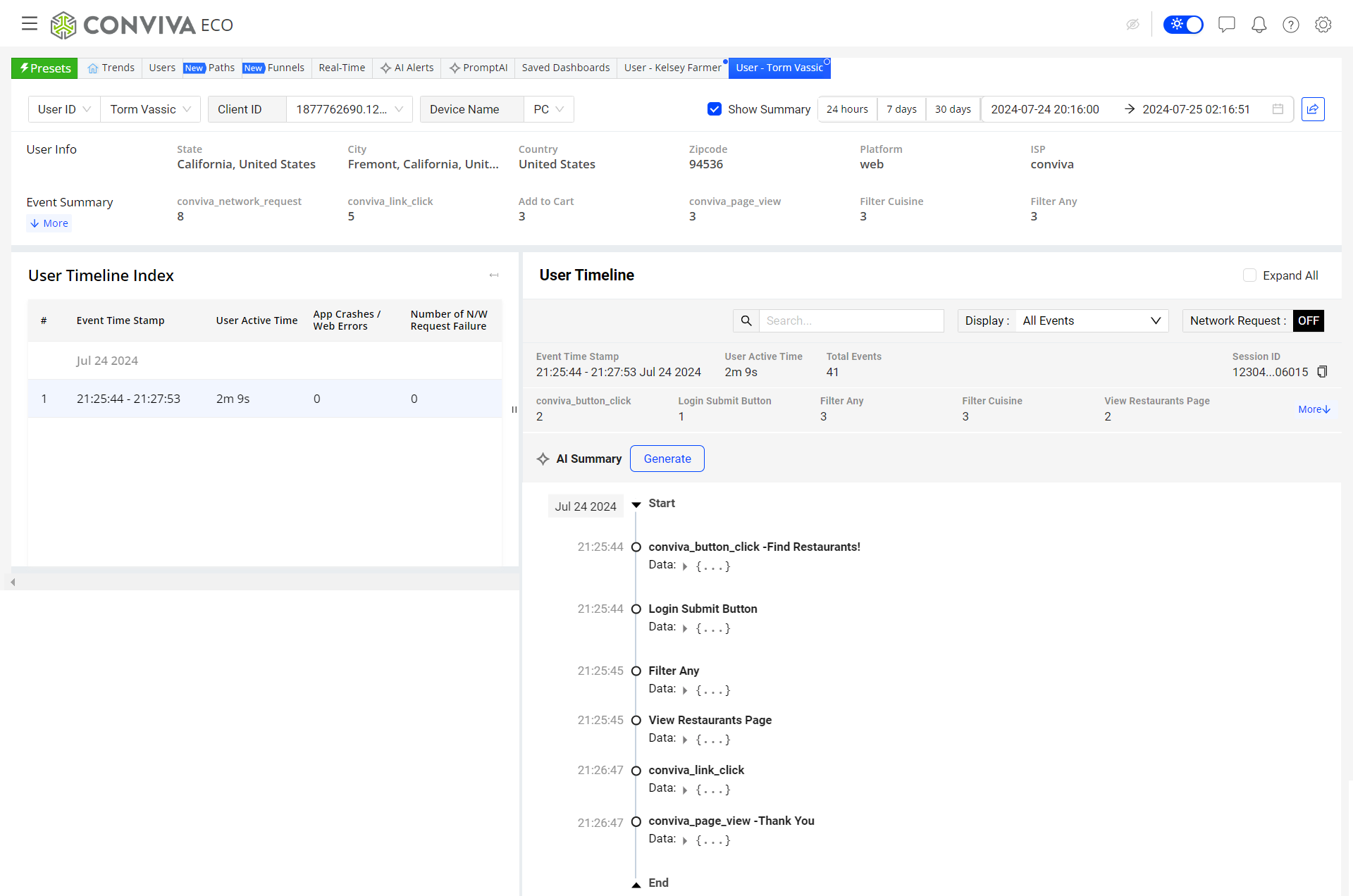

User Timeline Summary for DPI

The AI Summary feature on the User Timeline generates a summary of the timestamped events in the User Timeline, providing a deeper understanding of the events in the selected timeline. Because the summary goes beyond tabular metrics and metadata, it can be used cross-functionally across the organization.

To include Network Request details for the user events in the AI Summary, switch on the Network Request option before generating the summary.

Conviva employs intelligent algorithms to generate AI Alerts. When generating the User Timeline AI Summary, Conviva also uses AI to explain sessions that may have been part of an AI Alert cohort based on specific time frames, events, and metrics.

Tips for Good Prompting:

When asking questions, use full-text wording with proper case (for example, upper case for metric abbreviations), and rate each response with Thumbs Up or Thumbs Down to help validate response effectiveness.

- Enter full text searches
- Avoid abbreviations, such as mgmt for management
- Add question marks at the end of search text
- Apply case-sensitivity, e.g., all caps for metric abbreviations

| Recommended | Avoid |
|---|---|
| What is VSF-T? | What is VSF-t |
| What is CIRR? | what is cirr |
| How can I improve rebuffering issues? | rebuffering? |
| What is the difference between | vsf b vs vsf t |
| How much CIRR occurred in the last 24 hours? | How much rebuffering occurred in the last interval? |

Note: Please click the Thumbs Up / Thumbs Down response rating to help us tune the model towards better responses.
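
The prompting tips above can be sketched as a small pre-check that you run on a question before submitting it. This is only an illustrative sketch and is not part of PromptAI: the function name `check_prompt`, the three-word minimum used to approximate "full text", and the short abbreviation lists are all assumptions made for this example. The metric abbreviations come from the Recommended column above and are not a complete list of Conviva metrics.

```python
import re

# Assumption: abbreviations taken from the "Recommended" examples above;
# this is not a complete list of Conviva metric abbreviations.
METRIC_ABBREVIATIONS = ("VSF-T", "CIRR")

# Assumption: shorthand-to-full-word map; only "mgmt" appears in the tips.
SHORTHAND = {"mgmt": "management"}


def check_prompt(prompt: str) -> list[str]:
    """Return a list of tip violations for a prompt (empty if none found)."""
    problems = []
    text = prompt.strip()

    # Tip: enter full text searches.
    # Assumption: fewer than three words is treated as a keyword, not a question.
    if len(text.split()) < 3:
        problems.append("Use a full-text question instead of a keyword.")

    # Tip: add a question mark at the end of the search text.
    if not text.endswith("?"):
        problems.append("End the question with a question mark.")

    # Tip: avoid abbreviations, such as "mgmt" for "management".
    for word in re.findall(r"[A-Za-z]+", text):
        full = SHORTHAND.get(word.lower())
        if full:
            problems.append(f'Write "{full}" instead of "{word}".')

    # Tip: use all caps for metric abbreviations.
    for metric in METRIC_ABBREVIATIONS:
        pattern = r"\b" + re.escape(metric) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            if match.group(0) != metric:
                problems.append(f'Write "{metric}" instead of "{match.group(0)}".')

    return problems


if __name__ == "__main__":
    for prompt in ("What is CIRR?", "what is cirr", "rebuffering?"):
        print(prompt, "->", check_prompt(prompt) or "OK")
```

A prompt that follows all four tips returns an empty list. Each violation is reported as a short suggestion, so the question can be reworded before it is sent.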

FAQs

What is an LLM (Large Language Model)?

An LLM is a type of artificial intelligence model that is trained on vast amounts of text data to generate human-like text based on the input it receives. It is capable of understanding context and generating coherent and contextually relevant responses.

How do LLMs like PromptAI work?

LLMs like Conviva PromptAI use a deep neural network architecture called a transformer to process and generate text. They learn patterns and associations in the training data, enabling them to generate and predict text that appears natural and coherent.

What does Conviva use to train the LLM?

Conviva uses only data from Conviva content, such as the Conviva Learning Center, to train PromptAI.

How does PromptAI know about Conviva features?

We have uploaded the Conviva Learning Center content into our LLM, so PromptAI learns the product content and may combine that knowledge in ways not thought of before!

However, public LLMs outside of Conviva will not have the in-depth knowledge of Conviva product features and capabilities that PromptAI has.

Our LLM may also incorporate other content external to Conviva, so PromptAI may also attempt to answer questions that go beyond specific Conviva products.

Why does the response contain "I don't know..."?

Occasionally, the PromptAI response will start with “I don't know”. This response indicates PromptAI cannot find a definitive answer. Try rewording the question to focus on a known feature, metric, or capability.

Can PromptAI return metric values?

Yes, PromptAI can respond with metric values and time series widgets for specified periods in response to metric questions, such as 'How much rebuffering occurred yesterday?' and 'How many Concurrent Plays happened last week?'.

What are some limitations of LLMs?

LLMs may produce plausible-sounding but incorrect information. The models may also generate biased or inappropriate content, and they require significant computational resources to operate.

If you need 100% accuracy, verify the data in the corresponding Conviva dashboard.

How accurate is PromptAI in generating a response?

PromptAI accuracy depends on several factors such as the quality and size of the training data, the specific task or application, and how well the model has been fine-tuned. However, the model may occasionally produce inaccurate information, biased content, or content that is not relevant to Conviva and our applications.

Your use of PromptAI and your thoughtful feedback are critical to improvement. Please use the Thumbs Up and Thumbs Down icons to submit your feedback rating.

If you have any additional questions or concerns, you can always reach out to Conviva Support at support@conviva.com.

What are some applications of LLMs and generative AI tools?

LLMs are used in various applications, including natural language understanding, chatbots, content generation, language translation, sentiment analysis, and more. They can be applied in industries such as healthcare, finance, customer support, and content creation.

Using an LLM in a data-aware context provides even more value than the typical applications listed above because data can increase information context, accuracy, and certainty.

What is fine-tuning in LLMs?

Fine-tuning is a process during which pre-trained LLMs are further trained on specific data sets or tasks to adapt them to particular applications or domains. This helps improve their performance in specialized tasks.

How is feedback used?

In PromptAI, we do not directly apply user feedback to tune our LLM. Rather, we routinely audit feedback to control the accuracy and desired results of fine-tuning, without compromising privacy or ethics and without introducing user bias.
